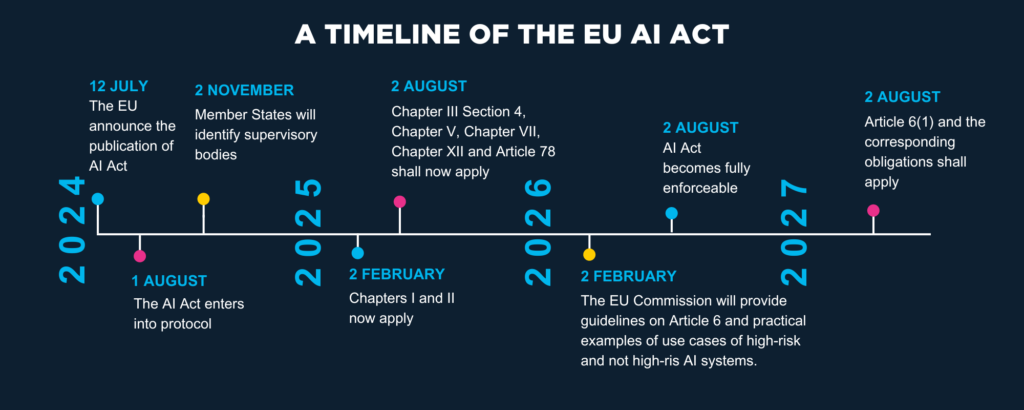

The European Union published the Artificial Intelligence Act (EU AI Act) in its Official Journal on 12 July 2024. The Act governs the development, placing on the market and use of AI systems within the EU. Whilst the Act entered into force on 1 August 2024, it is the key compliance milestones that follow which are of most interest to financial institutions operating within this space.

What are the key deadlines?

The Act will apply generally from 2 August 2026. However, it is vital that financial institutions proactively work towards meeting its requirements, guided by the interim deadlines that fall before and after this date.

Deadline 1: 2 February 2025 – Initial compliance with general provisions and prohibited practices

As of 2 February 2025, organisations that are using AI systems must ensure that they abide by the standards outlined in the first two chapters of the Act, concerning general provisions and prohibited practices. These chapters set out to whom the regulation applies, the critical definitions, and the practices that are prohibited when using AI systems. Financial institutions should start to think about how this impacts their existing working practices and how they can shape their products and services to use AI systems responsibly. The immediate focus for firms should be on eliminating from their business operations any practices identified as posing an unacceptable risk.

Deadline 2: 2 August 2025 – Enhanced accountability and transparency

As of 2 August 2025, companies that are leveraging AI will be held accountable to a greater degree for their use of AI systems. Financial institutions must comply with the transparency and confidentiality requirements relating to general purpose AI models. The Act details governance requirements, corresponding penalties for breaches, and notification requirements relating to relevant authorities and notified bodies. Firms should assess the risk that their activities breach the regulation and determine the operational impact that penalties would have on performance. Furthermore, it is crucial that organisations intending to employ such systems follow the required reporting protocols.

It is evident that firms must start to plan for these deadlines, as the fines associated with breaching the regulation are substantial. For example, non-compliance with the prohibitions on AI practices outlined in Article 5 can result in penalties of up to 35 million euros or 7% of the organisation’s total worldwide annual turnover for the previous financial year, whichever is higher. A fine of that size could have a severe negative impact on the business.
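To make the scale of that exposure concrete, the ceiling for an Article 5 breach can be sketched as simple arithmetic. This is a minimal illustration, assuming the "whichever is higher" rule that the Act applies to undertakings; the function name and the turnover figures are hypothetical, and it ignores any special treatment of smaller firms and any discretion the regulator has in setting the actual fine.

```python
# Illustrative only: upper bound of a fine for a prohibited-practice breach.
# Amounts are whole euros. Names and sample figures are assumptions.

FIXED_CAP_EUR = 35_000_000   # 35 million euros
TURNOVER_PERCENT = 7         # 7% of annual turnover for the previous year


def max_prohibited_practice_fine(annual_turnover_eur: int) -> int:
    """Return the higher of the fixed cap and 7% of annual turnover."""
    turnover_based = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


# A firm with 2 billion euros turnover: 7% exceeds the fixed cap.
print(max_prohibited_practice_fine(2_000_000_000))

# A firm with 100 million euros turnover: the fixed cap is the higher figure.
print(max_prohibited_practice_fine(100_000_000))
```

For larger institutions the turnover-based figure dominates, which is why the exposure grows with the size of the firm rather than stopping at 35 million euros.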

Deadline 3: 2 August 2027 – High-risk AI systems full compliance

Whilst the Act applies generally from 2 August 2026, compliance and the continued responsible use of AI systems remain critical beyond that date. From 2 August 2027, the obligations associated with the implementation and management of high-risk AI systems classified under Article 6(1) will be binding. Chapter 3, Section 2 details the key requirements for such systems: Risk Management Systems; Data Governance; Technical Documentation; Record Keeping; Transparency; Human Oversight; Accuracy; and Cybersecurity. This timeline gives financial institutions ample opportunity to map a process for the responsible use of AI in principle. The European Commission plans to provide guidelines on the practical implementation of Article 6 by 2 February 2026.

The European Commission intends to provide ongoing, tailored guidance on the implementation of a variety of requirements, as evidenced by Article 96. It is highly recommended that financial institutions engage with this practical guidance and align their AI strategy accordingly.
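For firms that track regulatory milestones in a compliance calendar, the deadlines described above could be held as a small lookup table. The following is a rough sketch only: the dates are taken from this article, while the labels, names and structure are assumptions made for illustration.

```python
from datetime import date

# Milestone dates as described in this article; labels are shorthand only.
MILESTONES = [
    (date(2025, 2, 2), "General provisions and prohibited practices"),
    (date(2025, 8, 2), "Enhanced accountability and transparency"),
    (date(2026, 8, 2), "General application of the Act"),
    (date(2027, 8, 2), "High-risk AI system obligations"),
]


def milestones_in_force(on: date) -> list:
    """Labels of all milestones whose date has been reached on the given day."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date):
    """The first milestone still ahead of the given day, or None if all have passed."""
    return next(((start, label) for start, label in MILESTONES if start > on), None)


today = date.today()
print("Already applicable:", milestones_in_force(today))
print("Next deadline:", next_milestone(today))
```

A table like this is only a planning aid. The Act itself and the Commission's guidance remain the authoritative source for what applies on a given date.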

What next for financial institutions?

The overarching message behind this legislation is that proactivity is the key both to compliance and to promoting mutually beneficial outcomes for firms and the regulator. Outlined below are the next steps that financial institutions can take to kickstart their AI compliance processes.

Map out your AI utilisation

The first step firms might take is to map their current use of AI systems, focusing on the areas that are impacted the most by the EU AI Act. Doing so will provide the organisation with a holistic view of where modifications to its AI strategy are necessary. For instance, if a firm finds through the mapping process that it uses AI for the provision of targeted job advertisements, a high-risk system, it can begin to plan the implementation of the critical management components required to comply, as outlined in Chapter 3, Section 2 of the Act.
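One way to picture the output of such a mapping exercise is a simple inventory that records each AI system, the firm's own view of whether it is high-risk, and which of the Chapter 3, Section 2 components are already in place. The sketch below is hypothetical throughout: the record structure, system names and risk flags are invented for illustration, and the high-risk flag reflects an internal assessment rather than a legal determination.

```python
from dataclasses import dataclass, field

# Component names as listed earlier in this article for high-risk systems.
HIGH_RISK_COMPONENTS = (
    "Risk Management Systems",
    "Data Governance",
    "Technical Documentation",
    "Record Keeping",
    "Transparency",
    "Human Oversight",
    "Accuracy",
    "Cybersecurity",
)


@dataclass
class AISystemRecord:
    name: str
    purpose: str
    high_risk: bool  # the firm's own assessment, not a legal ruling
    components_in_place: set = field(default_factory=set)

    def outstanding_components(self) -> list:
        """Components still to be implemented; empty for non-high-risk systems."""
        if not self.high_risk:
            return []
        return [c for c in HIGH_RISK_COMPONENTS if c not in self.components_in_place]


# Hypothetical inventory entries.
inventory = [
    AISystemRecord("job-ad-targeting", "Targeted job advertisements", True,
                   {"Record Keeping", "Transparency"}),
    AISystemRecord("meeting-summariser", "Internal meeting notes", False),
]

for record in inventory:
    print(record.name, "->", record.outstanding_components())
```

Keeping the gap list next to each system makes it easier to prioritise remediation for the systems the firm has flagged as high-risk.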

Assess your applicability

Following the mapping process, firms should assess which of the Act's requirements apply to their AI activities. This evaluation goes a step further, considering the intricacies of each requirement and assessing to what extent adjustments to the day-to-day operational use of AI are necessary. It is crucial that, at this stage, the focus is on the specific activities that the firm itself carries out, so that the resulting approach is appropriately tailored.

Integration into your compliance program

The use of AI is becoming more and more popular, and it isn’t going away. To efficiently and effectively implement controls that will aid compliance with the Act, firms must integrate their approach to AI risks into their compliance program. What will this look like in practice?

- Incorporate assessment and remediation of AI risks into your training program;

- Utilise behavioural profiling and AI-assisted tools within existing controls;

- Proceduralise periodic reviews of associated risks;

- Introduce a compliance monitoring process specific to use of AI; and

- Integrate the approach to AI into internal data protection and cybersecurity policy.

The EU AI Act: A significant step forward in regulation

The regulation and the associated compliance effort may initially seem daunting. However, it’s clear that the Act aims to protect firms rather than punish them.

Firms already using AI will now have the chance to refine their approach by incorporating comprehensive risk management of their systems to meet the deadlines, all whilst maintaining regular business-as-usual operations. The key to staying aligned with the EU AI Act, and benefiting from its protective measures, is to engage proactively today.

This post contains a general summary of advice and is not a complete or definitive statement of the law. Specific advice should be obtained where appropriate.